Node.js is known for its non-blocking, single-threaded architecture. A loop called the event loop runs on this single thread.

Although the event loop runs on a single thread, Node.js offloads work to the system kernel whenever possible, which lets it perform non-blocking I/O operations.
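A minimal sketch of what "non-blocking" means in practice. It uses `fs.stat` as an example asynchronous call; strictly speaking, file-system calls are handed to libuv's thread pool, while network sockets use the kernel's asynchronous mechanisms. The array `order` is just an illustrative name.

```javascript
const fs = require('node:fs');

const order = [];

// fs.stat is asynchronous: Node hands the work off and returns immediately.
fs.stat('.', (err) => {
  if (err) throw err;
  order.push('stat callback');
});

// This runs before the callback above, because the main thread
// does not wait for the I/O to finish.
order.push('code after fs.stat');

// Print the observed order once the event loop has nothing left to do.
process.on('exit', () => console.log(order));
```

Running it prints `[ 'code after fs.stat', 'stat callback' ]`: the synchronous code finishes first, and the callback is picked up by the event loop later.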

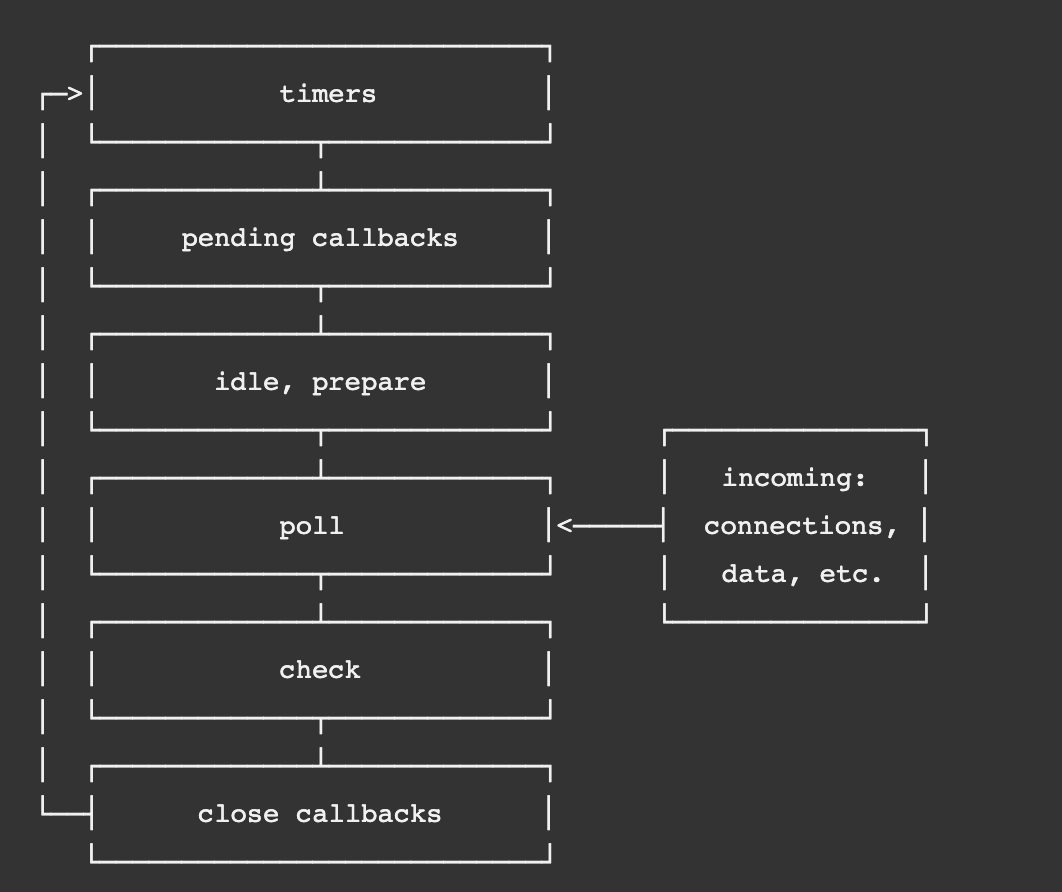

The event loop goes through its work in a fixed order of stages, usually called phases. The phases, in order, and what each one does are summarized below.

- timers: this phase executes callbacks scheduled by `setTimeout()` and `setInterval()`.
- pending callbacks: executes I/O callbacks deferred to the next loop iteration.
- idle, prepare: only used internally.
- poll: retrieve new I/O events; execute I/O related callbacks (almost all with the exception of close callbacks, the ones scheduled by timers, and `setImmediate()`); node will block here when appropriate.
- check: `setImmediate()` callbacks are invoked here.
- close callbacks: some close callbacks, e.g. `socket.on('close', ...)`.
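The phase order has observable consequences. As a small sketch: inside an I/O callback (the poll phase), a `setImmediate()` callback always runs before a `setTimeout(..., 0)` callback, because the check phase comes right after poll, while timers are only processed on the next loop iteration. Outside an I/O callback, the order of these two is not guaranteed.

```javascript
const fs = require('node:fs');

const order = [];

fs.stat('.', () => {
  // We are now inside an I/O callback, i.e. in the poll phase.
  setTimeout(() => order.push('setTimeout'), 0); // runs in the next timers phase
  setImmediate(() => order.push('setImmediate')); // runs in this iteration's check phase
});

process.on('exit', () => console.log(order));
```

This prints `[ 'setImmediate', 'setTimeout' ]` on every run.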

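So, contrary to popular belief, Node.js does not really run on just a single thread. However, it is not fully multithreaded either: JavaScript code runs on the single event-loop thread, while I/O is delegated to the kernel and to libuv's thread pool.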

Let's say we have a server whose CPU supports 16 hardware threads.

No matter how well the event loop is designed, work is handed to the thread pool sequentially by a single thread. Under high traffic this can cause unwanted delays, while most of the CPU's 16 hardware threads sit idle.
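To make the single-thread bottleneck concrete, here is a minimal sketch in which a timer asks to run "immediately" but has to wait for CPU-bound synchronous work on the main thread. The 200 ms busy-wait is an arbitrary stand-in for expensive request handling.

```javascript
const start = Date.now();
let timerDelay = null;

// Ask for this callback to run as soon as possible.
setTimeout(() => {
  timerDelay = Date.now() - start;
  console.log(`timer fired after ~${timerDelay} ms`);
}, 0);

// Simulate CPU-heavy synchronous work on the main thread (~200 ms busy-wait).
// The event loop cannot run anything else until this loop finishes.
while (Date.now() - start < 200) {
  // spin
}
```

The timer fires only after at least 200 ms, even though it was scheduled with a 0 ms delay. Every other pending callback, including incoming requests, waits the same way.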

To illustrate the situation with a story:

Consider a pizza shop with 4 couriers and 1 order manager who takes the orders and assigns them to the couriers. As orders become more frequent, it takes longer and longer for the next order to reach the next courier, because no matter how fast the manager works, they cannot do more than one job at a time.

In this scenario, the order manager is Node's single thread running the event loop, and the 4 couriers are Node's thread pool (libuv's thread pool has 4 threads by default).
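The story can be turned into a tiny queueing model. This is a hypothetical sketch, not a model of Node's internals: the manager spends `dispatchMs` on each order, one at a time, and couriers are assumed to always be free, so only the manager's queue matters. `dispatchDelays` and `evenArrivals` are illustrative names.

```javascript
// For each order arrival time, return how long it takes until a courier is notified.
function dispatchDelays(arrivals, dispatchMs) {
  const delays = [];
  let managerFreeAt = 0; // time at which the manager can take the next order
  for (const t of arrivals) {
    const start = Math.max(t, managerFreeAt); // wait while the manager is busy
    managerFreeAt = start + dispatchMs;
    delays.push(managerFreeAt - t); // arrival until courier notification
  }
  return delays;
}

// `count` orders arriving every `intervalMs` milliseconds, starting at 0.
function evenArrivals(count, intervalMs) {
  return Array.from({ length: count }, (_, i) => i * intervalMs);
}

console.log(dispatchDelays(evenArrivals(5, 10), 5));
console.log(dispatchDelays(evenArrivals(5, 4), 5));
```

With orders every 10 ms and 5 ms of manager work per order, every order waits 5 ms. With orders every 4 ms, the waits become 5, 6, 7, 8, 9 ms and keep growing for as long as orders keep arriving faster than the manager can dispatch them.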

So if we fork the main Node server into as many processes as the CPU has hardware threads, each listening on its own port, and then load-balance them behind a single port with a web server, wouldn't we be able to use the full CPU power of our server? Let's see.
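One possible way to do the forking is Node's built-in `cluster` module. The sketch below assumes workers listen on consecutive ports starting at a hypothetical `BASE_PORT` of 3000 and receive their port through a hypothetical `WORKER_PORT` environment variable; it is not necessarily the setup used for the benchmarks below. It needs Node 16+ for `cluster.isPrimary`.

```javascript
const cluster = require('node:cluster');
const http = require('node:http');
const os = require('node:os');

// Assumption: workers listen on consecutive ports starting here.
const BASE_PORT = 3000;

// One worker per logical CPU (hardware thread).
function workerCount() {
  // os.availableParallelism() was added in Node 18.14; fall back to os.cpus().
  return typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length;
}

// Port used by the worker with the given zero-based index.
function portFor(index) {
  return BASE_PORT + index;
}

function startCluster() {
  if (cluster.isPrimary) {
    // The primary only forks workers; it serves no HTTP traffic itself.
    for (let i = 0; i < workerCount(); i++) {
      cluster.fork({ WORKER_PORT: String(portFor(i)) });
    }
    cluster.on('exit', (worker, code) => {
      console.log(`worker ${worker.process.pid} exited with code ${code}`);
    });
  } else {
    const port = Number(process.env.WORKER_PORT);
    const server = http.createServer((req, res) => {
      res.end(`handled by pid ${process.pid} on port ${port}\n`);
    });
    // exclusive: true makes each worker bind its own port directly instead of
    // sharing a listening handle through the primary process.
    server.listen({ port, exclusive: true });
  }
}

module.exports = { workerCount, portFor, startCluster };
```

Calling `startCluster()` at the top level of the entry file starts everything: each forked worker re-runs that file and takes the worker branch. A reverse proxy such as nginx would then list ports 3000 through 3000 + N - 1 as its upstream servers. As a design alternative, `cluster` can also let all workers share a single port without an external load balancer; the per-port layout here simply mirrors the web-server approach described above.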

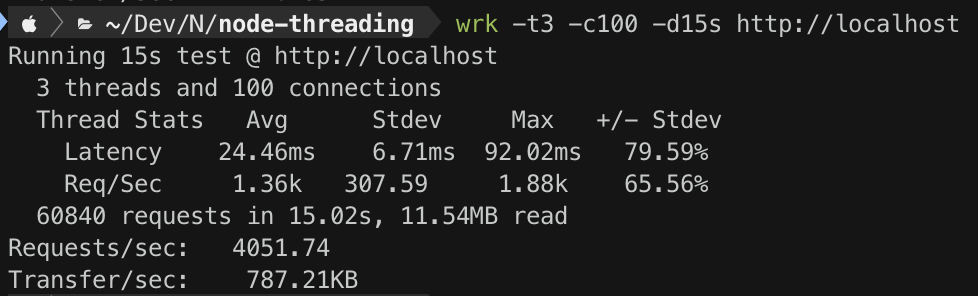

15-second stress test on a single-instance Node.js HTTP server, using wrk with 100 connections kept open over 3 threads:

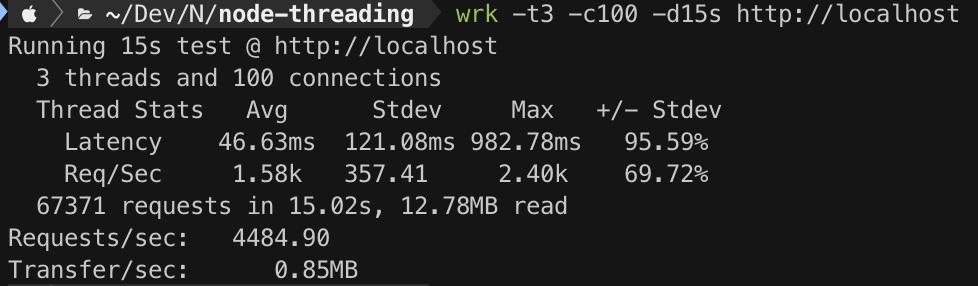

15-second stress test on a 4-instance Node.js HTTP server, using wrk with 100 connections kept open over 3 threads:

As the benchmarks above show, the performance difference between the 4x Node and 1x Node servers is 9.65%. I think that is a significant gain.
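For reference, a relative difference like this is usually computed against the baseline. The sketch below assumes the compared metric is one where higher is better, such as requests per second; the figures in the usage line are hypothetical and not taken from the benchmarks.

```javascript
// Relative improvement of `improved` over `baseline`, in percent.
// Assumes a higher-is-better metric such as requests per second.
function percentGain(baseline, improved) {
  if (baseline <= 0) throw new RangeError('baseline must be positive');
  return ((improved - baseline) / baseline) * 100;
}

// Hypothetical numbers: 10,000 req/s for 1x Node vs 10,965 req/s for 4x Node.
console.log(percentGain(10000, 10965).toFixed(2));
```

For a lower-is-better metric such as latency, the sign flips, so it is worth stating which metric the percentage refers to.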
